Keynote slides

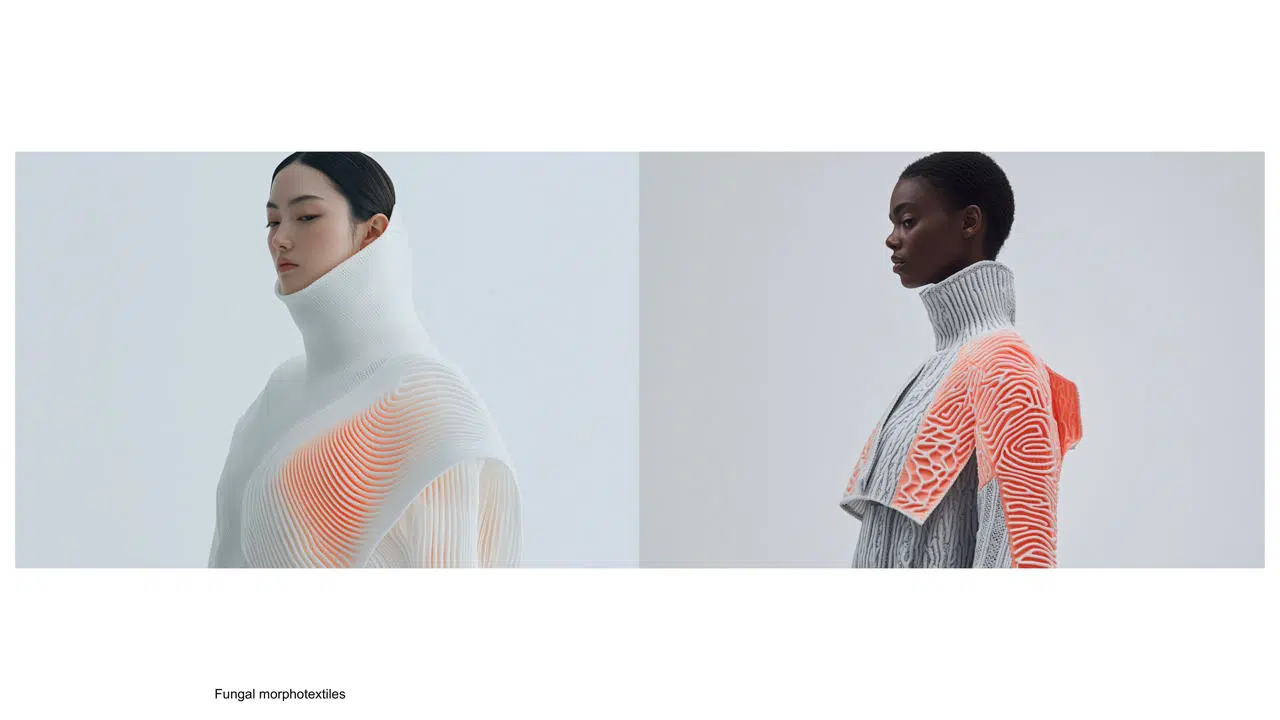

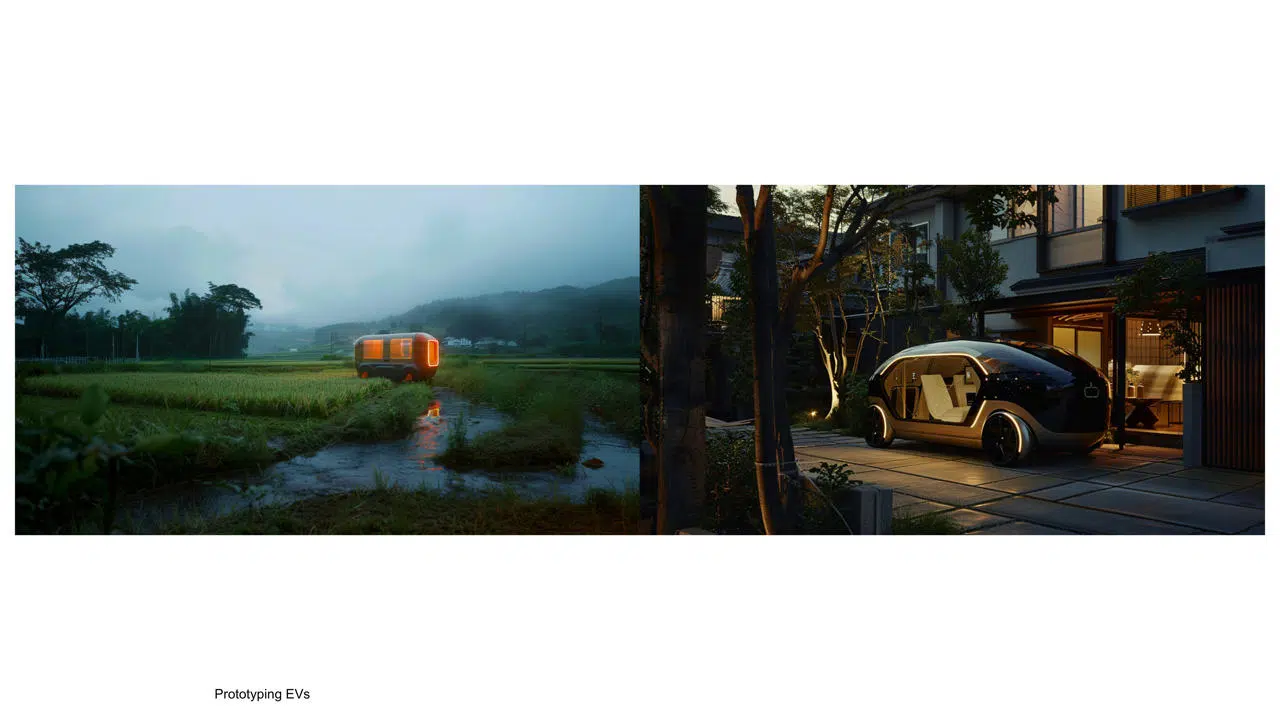

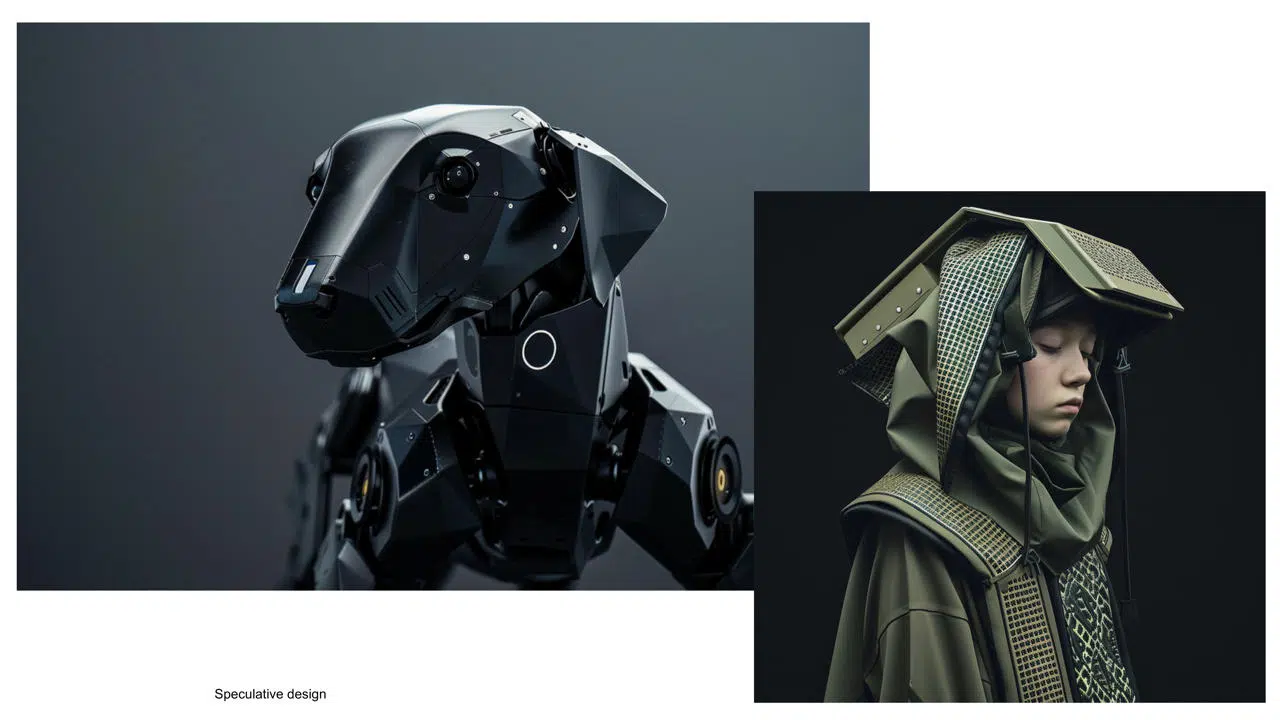

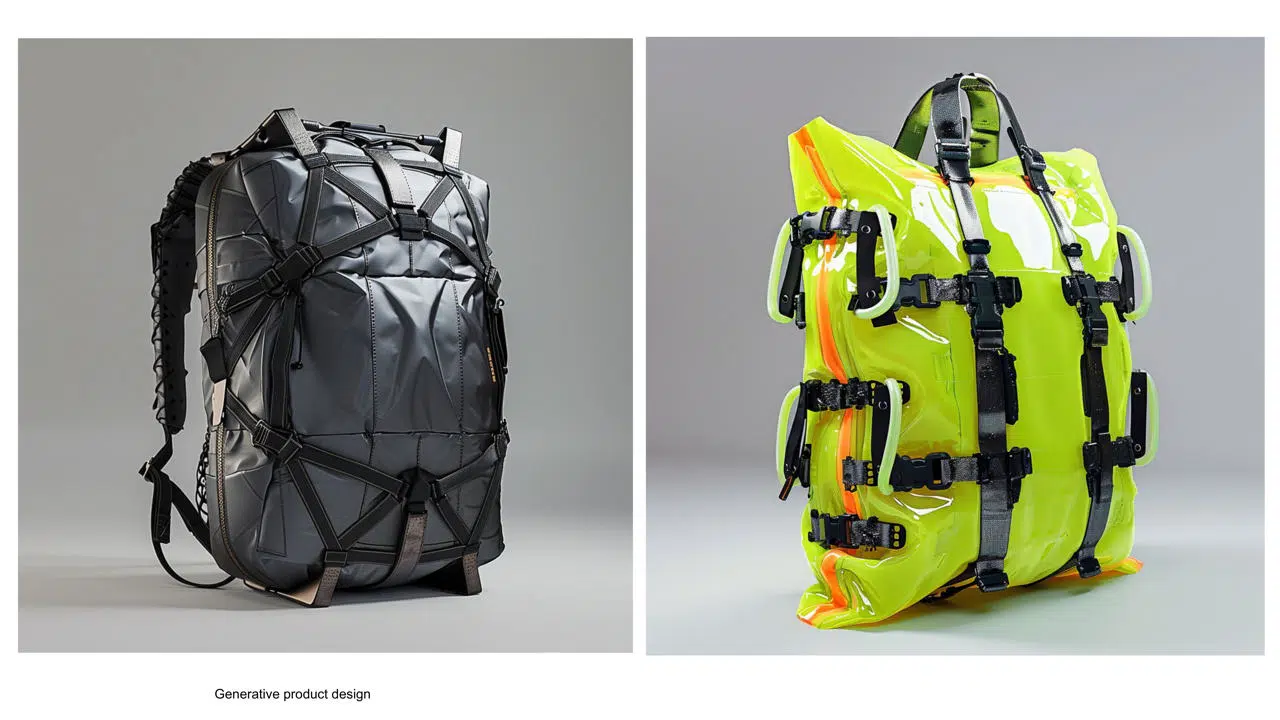

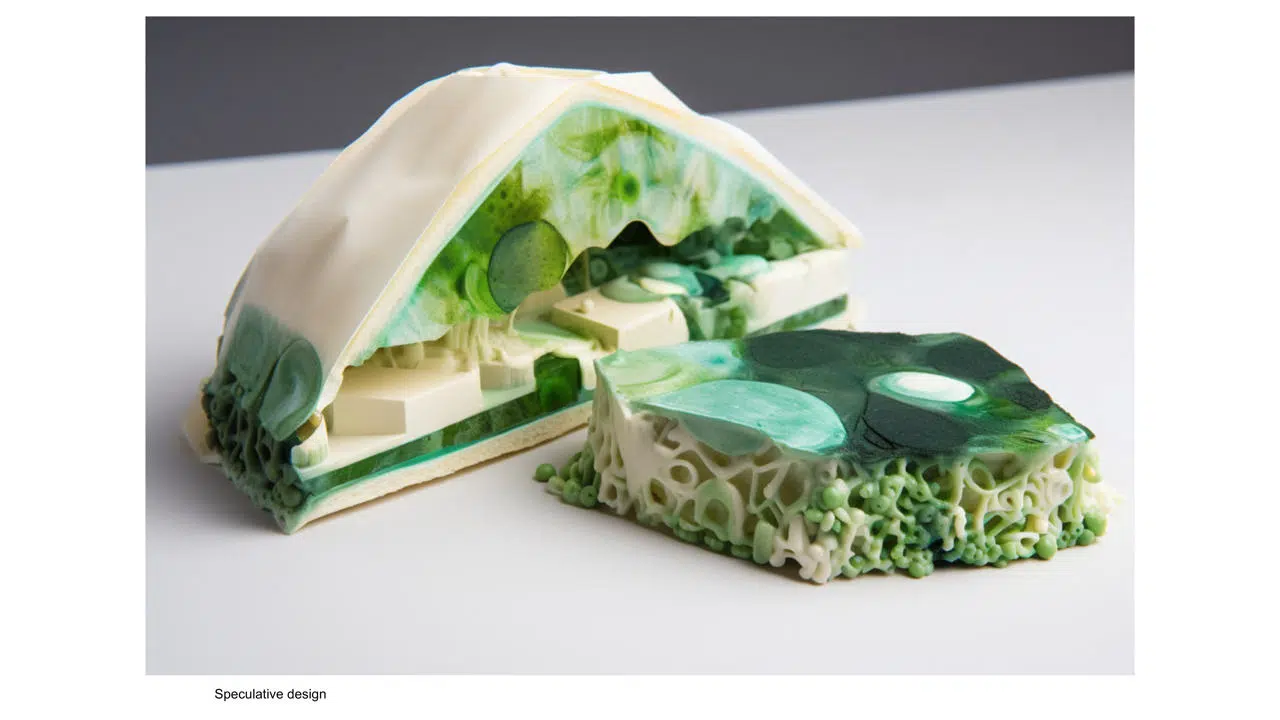

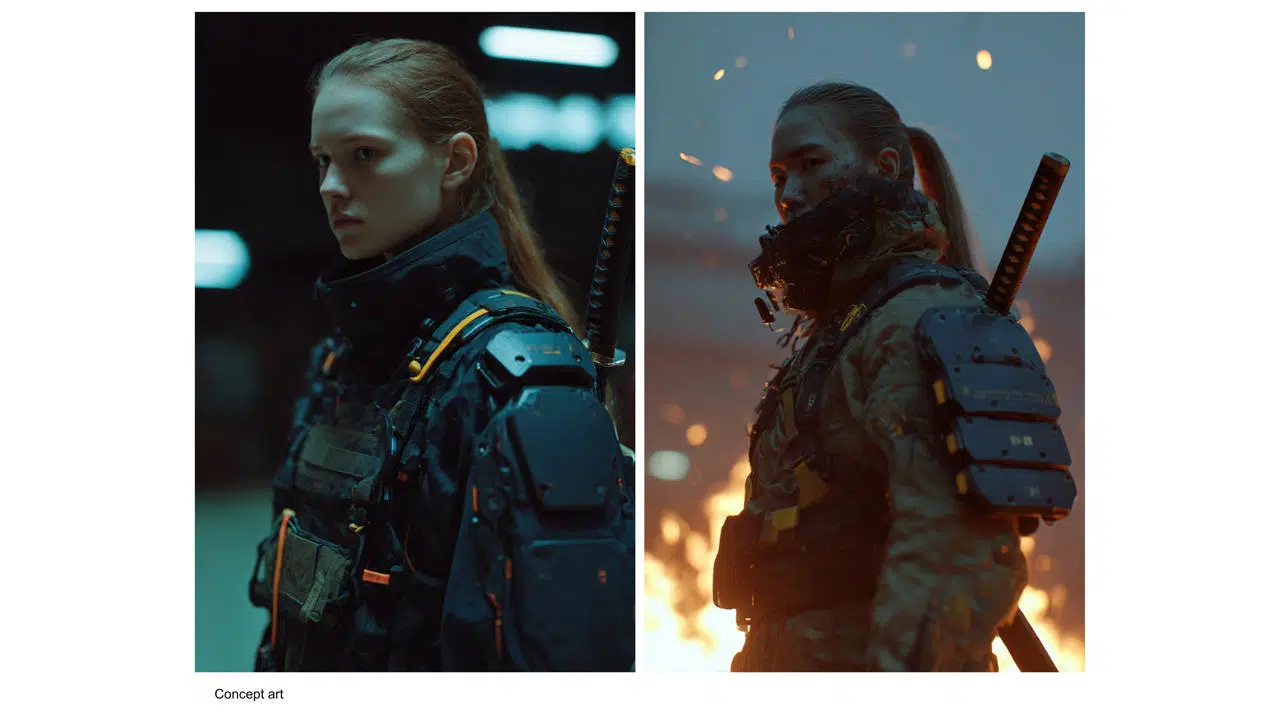

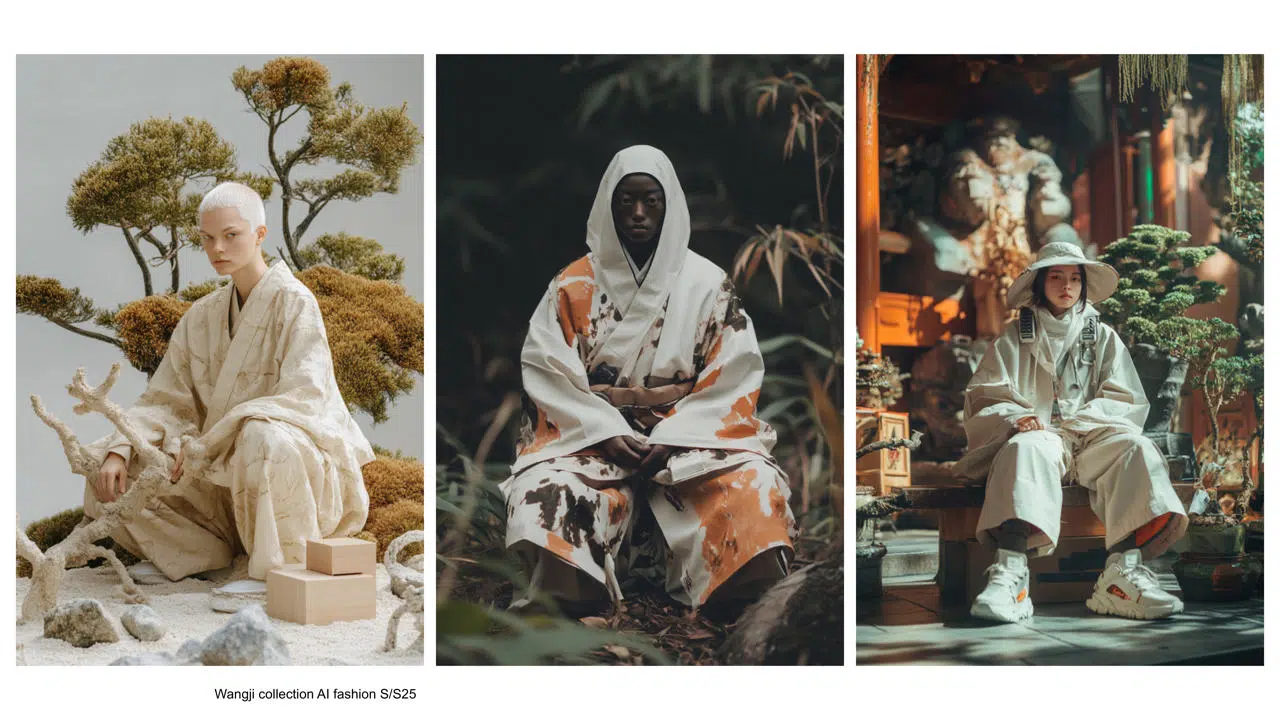

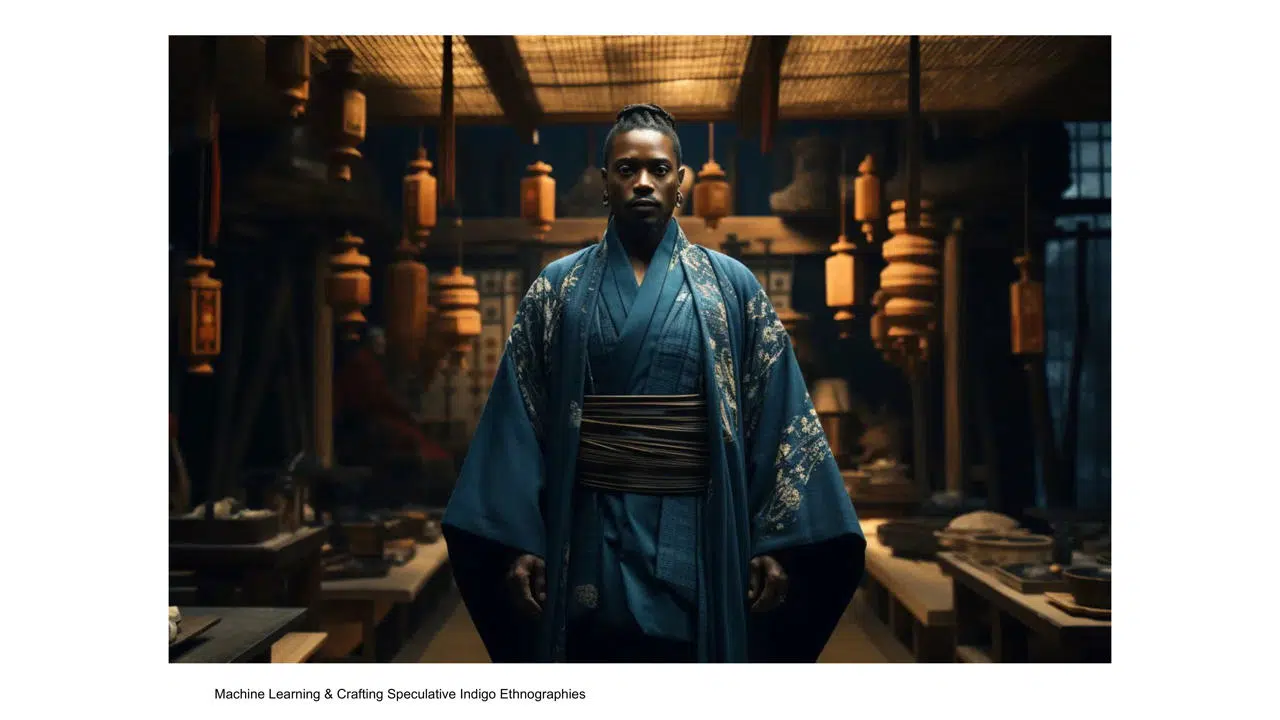

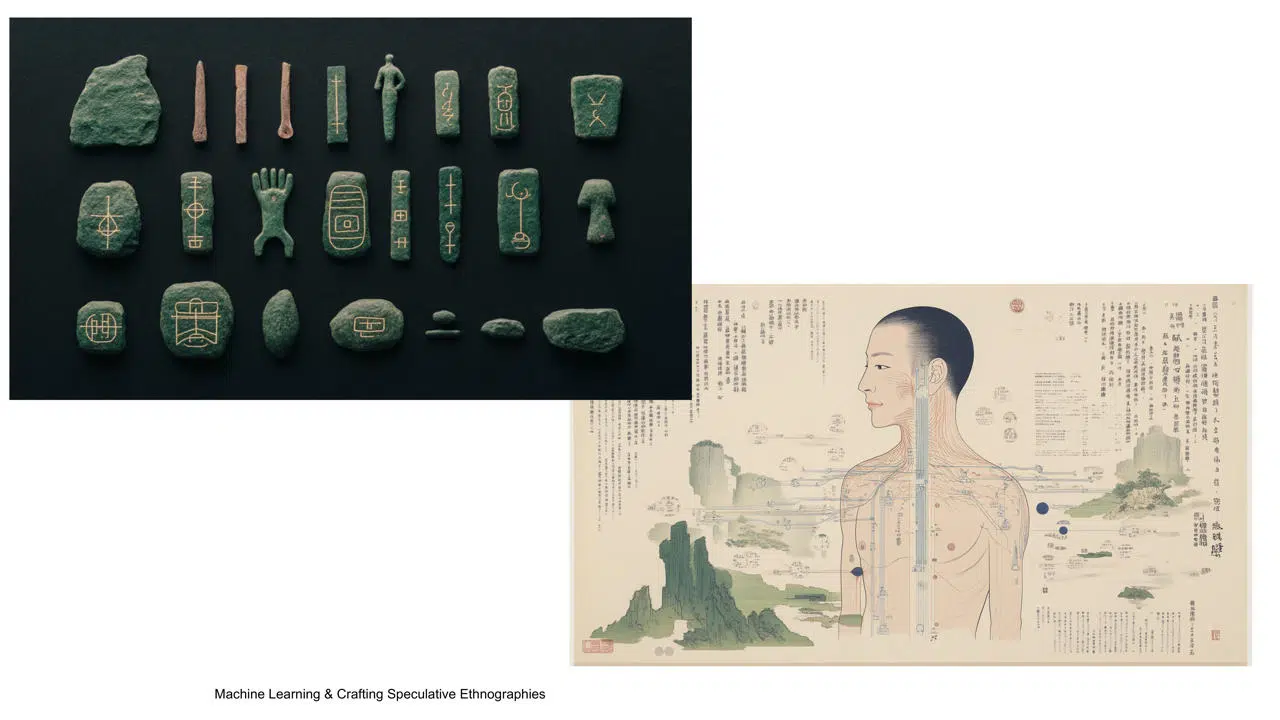

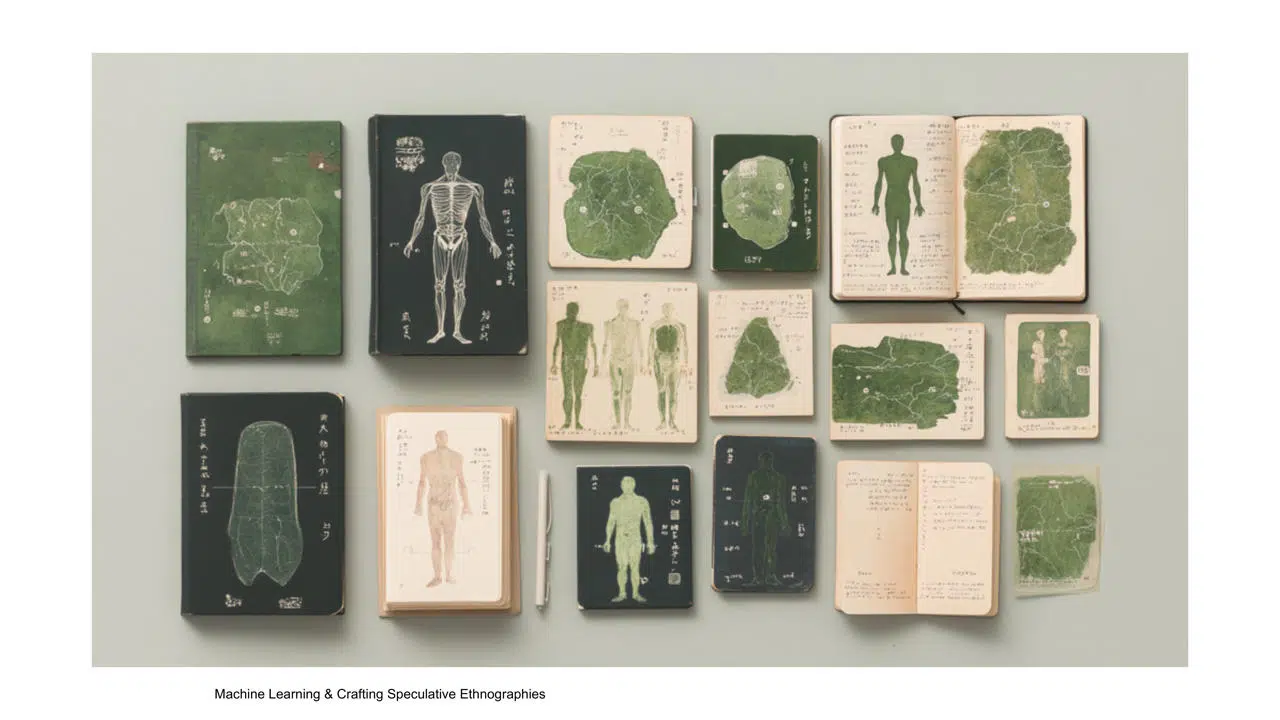

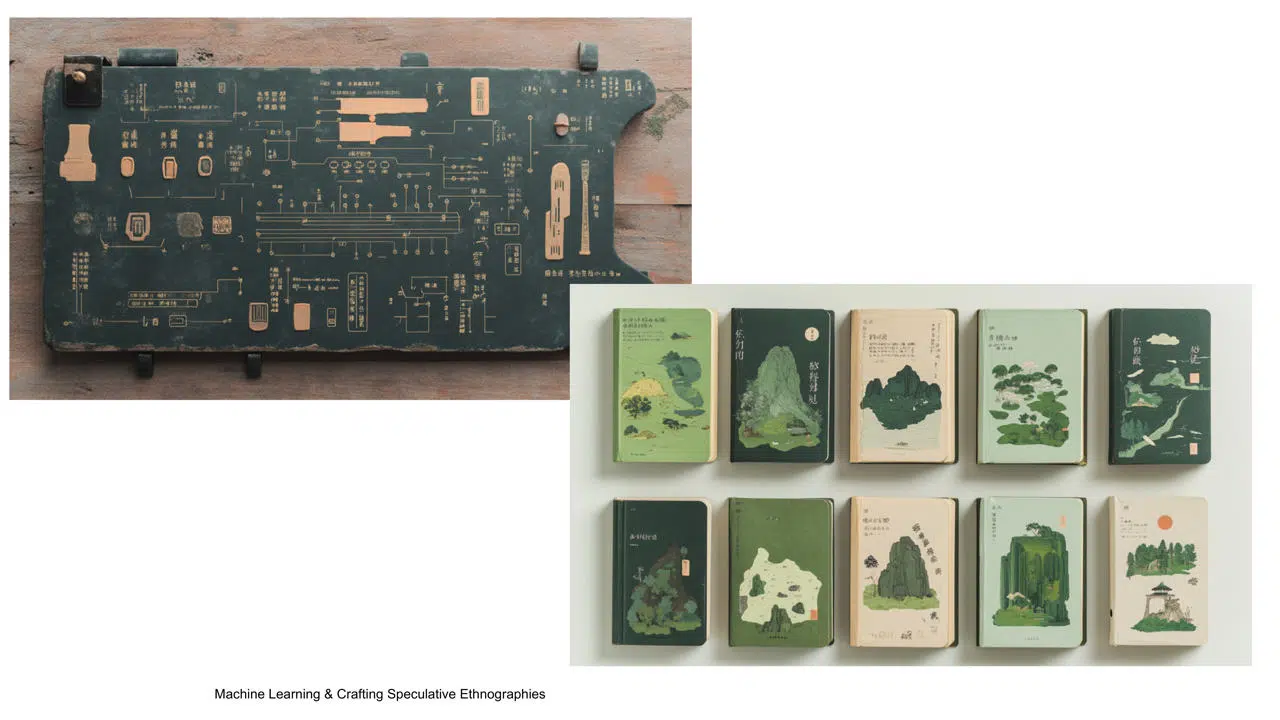

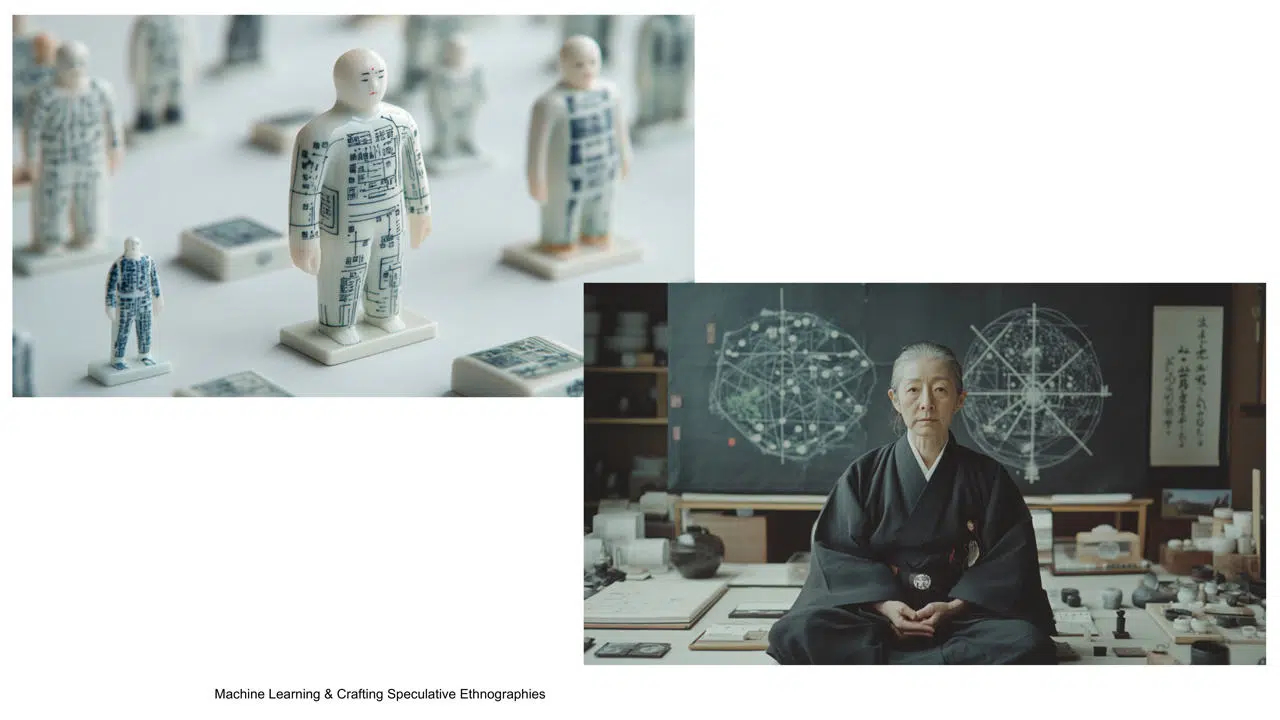

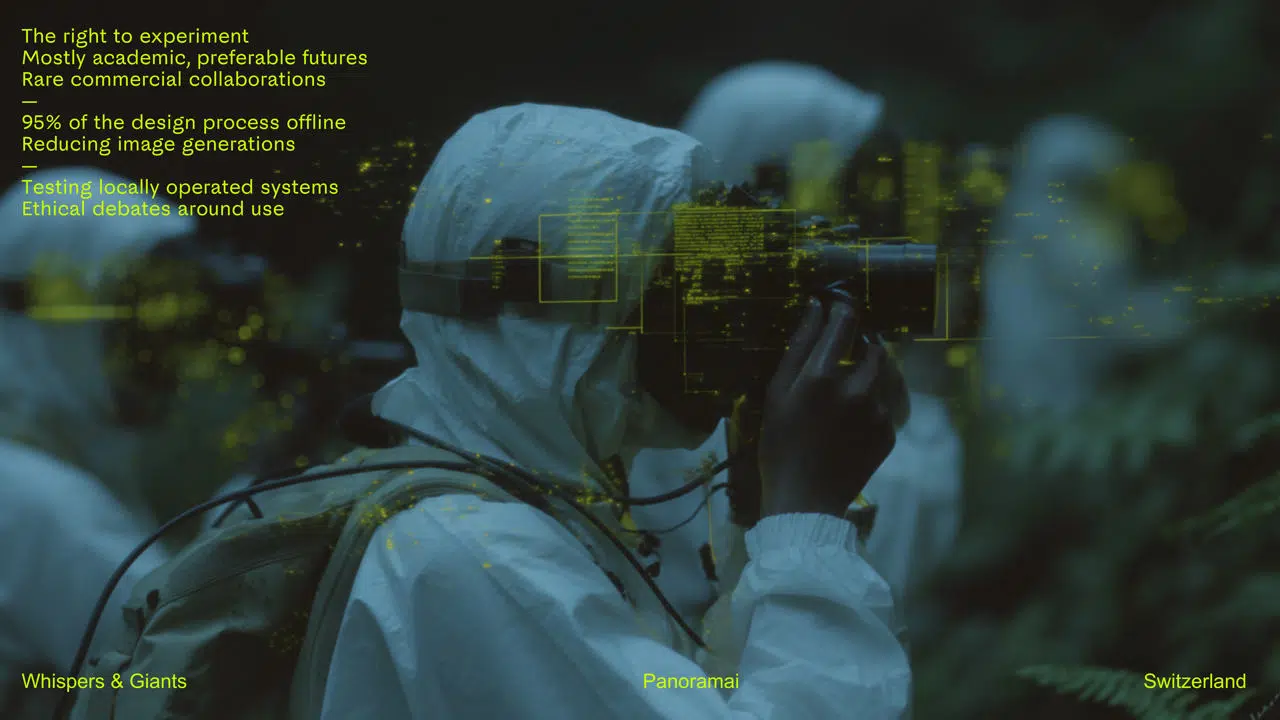

Last week at Panormai, I presented some of my work with AI-generated images, mainly using Midjourney as part of an augmented but critically constrained creative process. Invited by Raphaël Brinner, I emphasized that my approach defends the right to experiment, with a focus on academic inquiry and preferable futures, not market aesthetics.

This slow approach echoes older debates about photography, which was once applauded for archiving memory but feared for its ability to displace imagination. In 1859, Baudelaire warned:

“If it is allowed to encroach on the domain of the impalpable and the imaginary… then woe to us!”

The same caution applies to how we approach generative tools today, and to the question of what AI images mean for creators and their process.

I’m experimenting with workflows that are deliberately 95% offline. I minimize prompting and invest heavily in analog preparation (sketches, references, material studies) before generating anything. Image output is kept sparse, often only a few generations per idea, to avoid aesthetic saturation, maintain conceptual clarity, and limit server use.

I’m also exploring small models and systems that run locally on my solar infrastructure, to reduce dependency on large platforms. This work includes ongoing ethical reflection on authorship, automation, and visual culture’s complicity in normative projection.

In short, it’s not about producing more images, but about questioning how and why we generate them at all.