
All images in this presentation were made with AI using Midjourney.

In April 2025 I spoke at Armasuisse, at the invitation of Quentin Ladetto on his Deftech platform, about trust: not as a virtue or leadership trait but as a condition being reprogrammed. This post reclaims the narrative logic of that talk and re-anchors it in writing, not to summarize it but to unpack the architecture of the argument. The core claim is simple: trust has not disappeared, it has been rerouted. And the systems now capturing it are not democratic, deliberative or slow. They are fast, opaque and frictionless.

Trust as structured vulnerability

Trust is often reduced to interpersonal dynamics or institutional ethics.

But that frame is too narrow. Trust is a mechanism for navigating complexity when verification is impossible. It’s how we act in systems too vast to see and too interdependent to control. When we drink milk without testing its quality, board a train without checking whether the driver is sober, or click “accept” on a digital interface we don’t understand, we are operating on distributed trust.

What makes humans unique is our capacity to scale trust through institutions. We delegate credibility to structures like courts, schools, currencies and media so that we don’t have to verify every interaction ourselves. But when those institutions weaken or fail, the trust they upheld collapses with them. It doesn’t erode gradually. It vanishes quickly, as in a zombie movie where the social fabric holds until suddenly it doesn’t. The illusion of order breaks. Routines that once felt safe become risky overnight. What was implicit trust becomes ambient threat.

Pierre Bourdieu reminds us that trust isn’t always conscious. We inherit it socially through exposure to roles, rituals and institutional behaviors we’ve learned not to question. Trust is a form of structured vulnerability. It allows us to move without constantly reassessing our conditions of movement.

The end of the sovereign decider

This kind of trust (embedded, relational, assumed) coexists with the political myth of the sovereign individual. Rational, autonomous, self-directing: the imagined citizen of liberal democracy. But this figure is increasingly untenable. Decision-making now unfolds in ambient infrastructures scaffolded by recommender systems, interface defaults and behavioral nudges. We do not choose alone.

We perceive and decide in systems that pre-structure what’s seen and what’s possible. The question is no longer whether to trust but how to remain orientable in a world where sense-making is being quietly externalized.

Born into broken trust

For those born at the end of the millennium, trust is not a baseline. It’s a broken inheritance. Their formation has been shaped not by institutional stability but by recursive failure: the subprime crisis, surveillance leaks, climate betrayal, algorithmic manipulation, pandemic disinformation and epistemic polarization. Trust, for them, is not about confidence. It’s about navigation. It’s about who and what still offers traction.

September 11 attacks, Enron collapse, Iraq War, WikiLeaks, subprime mortgage crisis, Snowden affair, Fukushima disaster, Rana Plaza collapse, Haiti earthquake, European migration crisis, Rohingya genocide, drone attacks, Brexit, Panama Papers, Cambridge Analytica, Trump election, MeToo, opioid crisis, Black Lives Matter, Yemen famine, war in Tigray, Yellow Vest movement, massive cyberattacks, NotPetya, WannaCry, Pegasus revelations, COVID-19 pandemic, Beirut port explosion, Capitol assault, Ukraine invasion, Israel/Hamas, Gaza, CrowdStrike, Trump II, Los Angeles in flames.

AI doesn’t mirror, it organizes

Culturally, AI is framed through familiar binaries: apocalypse or assistant. Skynet versus Jarvis. Minority Report versus Her. But the real shift lies elsewhere. AI doesn’t simply help or threaten, it reorganizes. It reshapes how knowledge appears, how decisions are framed and how we relate to the unknown.

In my work I’ve been using the concept of capture not as a metaphor but as a condition. Capture operates on multiple layers: bodily, cognitive and epistemic.

First, our biological rhythms are entrained. Take disposable vapes, or “puffs,” which merge nicotine and virality. They don’t just deliver nicotine. They combine individual chemical dependence with platform-level algorithmic spread, hijacking both neurobiology and visibility. Among teens, this creates a dual loop of addiction: one physiological, through dopamine and nicotine, the other social, through visibility and feed-based amplification. Vaping becomes a hybrid act of self-regulation and self-presentation, wired into the mechanics of attention economies.

Another sense of capture: Tinder maps intimacy to speed. Through geolocation and swipe mechanics, it accelerates relational encounters to the point of frictionless contact while, in some cases, also facilitating the spread of STDs. These are not isolated effects. Public health experts have begun describing them as hybrid epidemics, in which algorithmic matchmaking structures biological exposure. Health authorities are struggling to catch up with these algorithmically driven patterns of contagion.

Netflix, for its part, monetizes sleeplessness. The platform treats sleep, a core biological function, as an obstacle to be overcome in the pursuit of engagement. Autoplay and cliffhanger sequencing override bodily signals in favor of continued attention. What is being captured is not only time or attention but the regulation of rest itself. Sleep is reframed as a missed opportunity for viewership, recoded from recovery into interruption within the logic of extraction. Around the world, sleep patterns are being terraformed by platforms, with deep consequences for public health.

The shift from epistemic to algorithmic trust

This reconfiguration of trust is structural. It is not just about where we place trust but what kind of trust we now rely on.

We are moving away from epistemic trust, which depends on shared deliberation, contradiction and slow mediation, toward algorithmic trust, which hinges on usability, immediacy and predictive consistency.

More and more, we trust systems that work over systems that represent. Apps deliver. Logistics function. Interfaces rarely crash. These systems don’t require belief. They only require interaction. You don’t have to trust the platform, you just tap the button and get the result.

Institutions, by contrast, demand belief while often delivering contradiction. They ask for legitimacy while producing incoherence. The result is a rerouting of confidence, not into deeper engagement but into shallow, predictable, functional flows. What works replaces what matters. In doing so, we are offloading a fundamental societal function, collective sense-making, to commercial platforms, most of them based in the current authoritarian United States and accountable primarily to shareholders, not publics. These actors now shape not only how we access information but how we determine credibility, sequence relevance and recognize coherence. What was once a civic process is now an infrastructure-as-a-service model running beneath the surface of everyday life. Trust becomes a UX shortcut outsourced to a distant server.

Orientation dissolves

These transformations are not neutral. They are embedded in techno-financial architectures optimized for adherence and frictionless performance. Platform logic absorbs trust and operationalizes it, not as shared meaning but as behavioral compliance. As bodily capture rewires rhythms, cognitive capture outsources decision-making and epistemic capture flattens doubt into coherence, something deeper is lost: the conditions for orientation.

When meaning arrives pre-assembled and contradiction is filtered out, we do not simply lose truth. We lose the ability to navigate complexity with others. What disappears is not knowledge but the possibility of shared sense-making.

Governance becomes interface

The erosion of intermediary institutions (schools, unions, local media, civic spaces) is not an accidental byproduct. It’s a strategic bypass. Platforms offer smoother alternatives to collective deliberation. Governance becomes interface. Conflict becomes friction. Feedback becomes compliance. But this architecture falters under pressure. In the absence of institutions that can absorb conflict, society becomes reactive, brittle and fragmented. Crisis moves through interfaces faster than sense-making can catch up.

War no longer begins at borders. It begins in minds. And the battlefield is trust.

Legacy tools for post-reality conditions

We are confronting 21st-century destabilization with 19th-century institutions and 20th-century myths. In doing so, we risk losing more than capacity. We risk losing the shared memory and mutual intelligibility that democracy depends on. Trust, once the outcome of slow deliberation, is now a parameter: calculated, tuned, optimized. It becomes a system setting, not a public agreement. If we are to resist this drift, we must design for reorientation. We must reintroduce friction, ambiguity and contradiction, the very features that functional systems erase in the name of usability. Trust must be treated not as a UX performance indicator but as a contested, political infrastructure.

Toward counter-infrastructures of trust

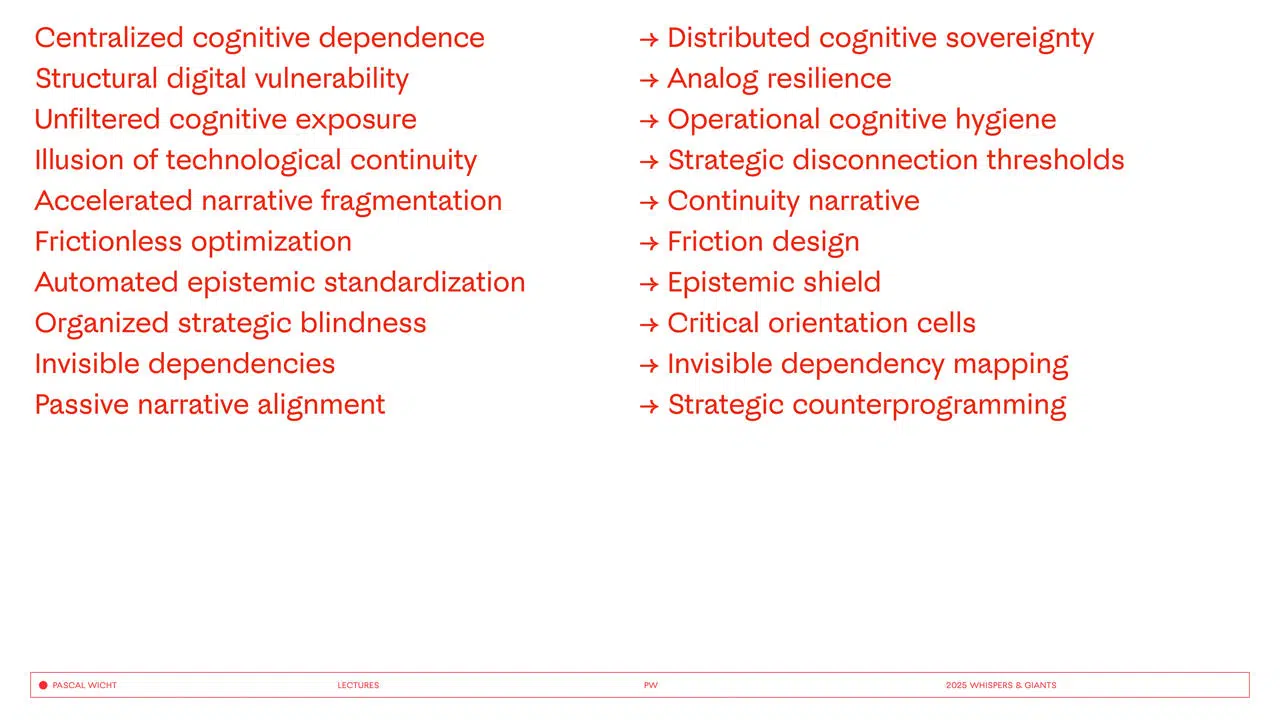

If trust has been rerouted through algorithmic systems, the task is not to restore the past. It’s to construct scaffolds for cognitive sovereignty. This means building epistemic shields, not to block data but to slow it down, to make time again, to restore the conditions for judgment. It means setting strategic disconnection thresholds: deliberate exits from the loop, not to disconnect from the world but to regain distance from systems that no longer allow orientation.

It means practicing operational cognitive hygiene, protecting the mental space where doubt, contradiction and reframing can still occur. Hygiene not as purity but as resilience. It requires mapping invisible dependencies, the hidden architectures shaping what we know and how we move. Without this, transparency is theater. And it demands that we seed critical orientation cells, distributed autonomous spaces for sense-making within noise. Not think tanks, not innovation labs, but environments structured for friction, for unlearning, for rehearsing other kinds of coherence.

This isn’t a roadmap. It’s the scaffolding of a future we don’t yet know how to trust. But if trust is now coded, then so must be its refusal.

Not just how to trust but where.

And with whom.